Why Inverting AI Workflows Is Key to Enterprise Accuracy

How leveraging human judgment and reversing traditional AI workflows can overcome dirty data challenges.

In this post, I want to highlight a persistent challenge that often stalls efforts to improve AI accuracy in enterprise environments. Many acknowledge that insufficient data can be a limiting factor, especially when proprietary information is needed to enhance a general-purpose large language model (LLM), but the real issue goes beyond mere scarcity. To achieve top-tier domain performance, enterprises may need to fine-tune LLMs so that they internalize specialized knowledge and context. Unlike Retrieval-Augmented Generation (RAG) or prompt-based methods, which inject additional information at inference time without altering the model’s parameters, fine-tuning updates the model’s weights, so the training data becomes part of the model’s internal representations.

This direct integration makes data quality crucial. Fine-tuning can deliver significant improvements in accuracy and domain alignment, but only if the training data is both reliable and relevant. Unfortunately, many enterprise datasets—sourced from CRMs, ERPs, and other operational systems—are incomplete, inconsistent, and lack clear indicators of quality. These repositories often contain “dirty” data that, even after cleaning, remains difficult to differentiate in terms of importance or strategic value. Merely connecting an LLM to these data sources, hoping they will serve as a source of refined knowledge, is insufficient. Without a methodology to identify which subsets truly matter, organizations risk overwhelming the model with undifferentiated content, ultimately diluting its domain expertise rather than enhancing it.

Only with robust frameworks for scoring, filtering, and weighting data can organizations ensure that their fine-tuned LLMs prioritize the most accurate, timely, and contextually relevant information. In the absence of these data differentiation strategies, the promise of fine-tuning remains out of reach, and valuable proprietary knowledge fails to translate into tangible performance gains for the AI model.
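To make scoring, filtering, and weighting concrete, here is a minimal Python sketch. It assumes each record carries a source label, a last-verified date, and a completeness flag. The per-source trust values, the one-year freshness half-life, and the 0.3 cutoff are hypothetical numbers chosen for illustration, not recommendations.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Tuple

@dataclass
class Record:
    text: str
    source: str          # hypothetical source labels, e.g. "crm", "erp", "faq"
    last_verified: date  # when a person last confirmed the record
    complete: bool       # whether all required fields are filled in

# Hypothetical trust levels per source; real values would come from the business.
SOURCE_TRUST = {"faq": 0.9, "erp": 0.7, "crm": 0.6}
DEFAULT_TRUST = 0.3  # unknown sources are trusted least

def score(record: Record, today: date, half_life_days: float = 365.0) -> float:
    """Combine source trust, freshness, and completeness into a score in [0, 1]."""
    trust = SOURCE_TRUST.get(record.source, DEFAULT_TRUST)
    age_days = max((today - record.last_verified).days, 0)
    freshness = 0.5 ** (age_days / half_life_days)  # halves every half_life_days
    completeness = 1.0 if record.complete else 0.5
    return trust * freshness * completeness

def select_and_weight(records: List[Record], today: date,
                      threshold: float = 0.3) -> List[Tuple[Record, float]]:
    """Filter out low-scoring records and normalize the rest into sampling weights."""
    scored = [(r, score(r, today)) for r in records]
    kept = [(r, s) for r, s in scored if s >= threshold]
    total = sum(s for _, s in kept)
    return [(r, s / total) for r, s in kept] if total > 0 else []

if __name__ == "__main__":
    today = date(2024, 6, 1)
    records = [
        Record("Refund policy v3", "faq", date(2024, 5, 1), True),
        Record("Old discount note", "crm", date(2019, 1, 1), False),
        Record("Order workflow", "erp", date(2024, 1, 1), True),
    ]
    for record, weight in select_and_weight(records, today):
        print(f"{record.text}: {weight:.2f}")
```

Multiplying the factors means one weak signal (very stale, or from a low-trust source) is enough to push a record below the cutoff; an additive score would be more forgiving. The resulting weights could then drive sampling when assembling a fine-tuning set.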

An Even Bigger Problem: Human Knowledge Gaps

Even if enterprise data could be perfectly cleaned, quantified, and integrated, that alone wouldn’t guarantee optimal AI-driven decisions. A significant portion of critical knowledge remains locked in people’s heads, and it varies with each person’s expertise, judgment, and subjectivity. This is why organizations hold meetings, rely on structured decision-making processes, and depend on human interpretation to turn data into (hopefully) sound judgment.

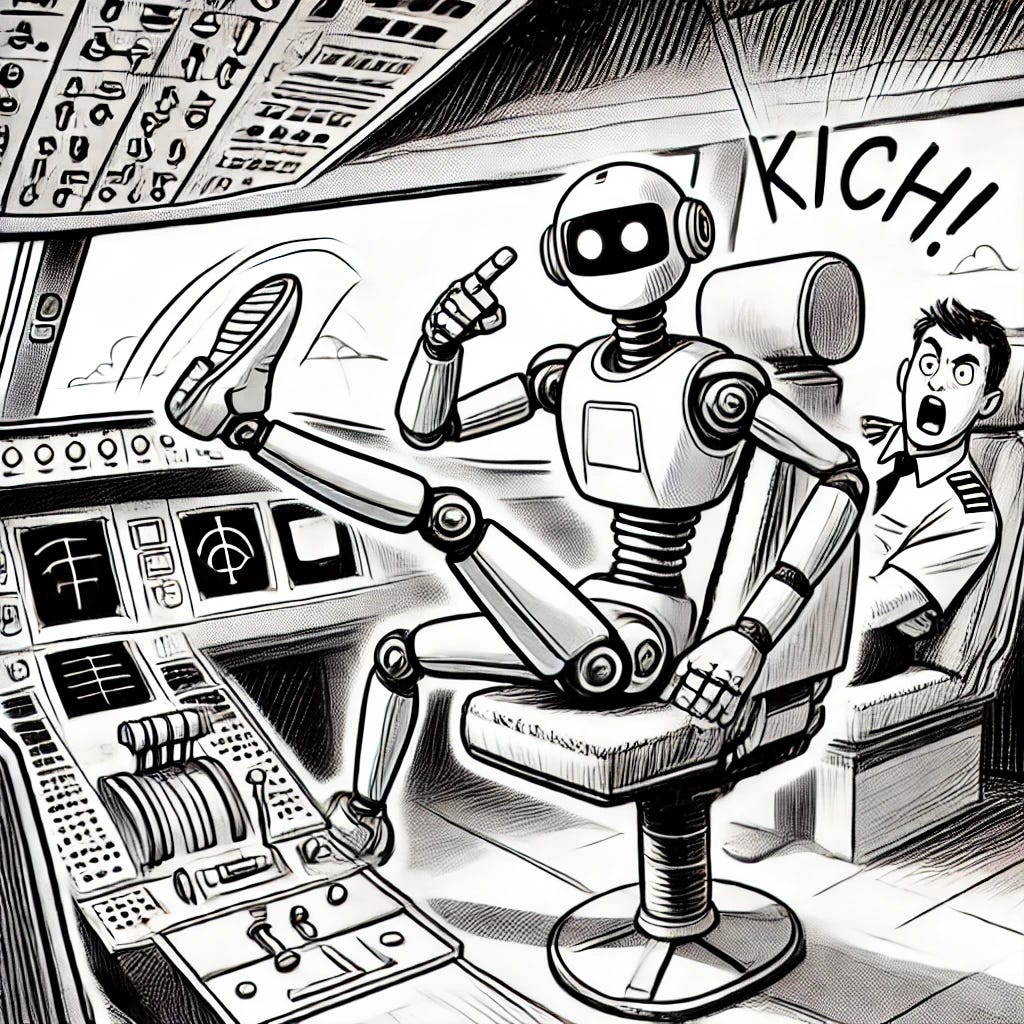

To overcome this challenge, enterprises must rethink their AI workflows. Rather than AI serving as a co-pilot to human decision-makers, it should take the role of the pilot. In this model, humans shift from being the central drivers of decision-making to acting as data providers or experts who contribute insights only when the AI specifically requests them. The ideal scenario minimizes human intervention, enabling the AI to steer autonomously.

To extend the aviation analogy, this is not a one-time handover of the controls. The AI system should operate within a near real-time decision loop, constantly refining its approach based on new information and feedback. In doing so, the enterprise moves toward a state where human judgment is available on demand but rarely required at the forefront.
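The pilot-and-expert inversion, run as a continuous loop, can be sketched as a small class. This is an illustrative assumption, not a reference design: `model` stands in for any function that returns an answer plus a confidence score, `ask_expert` stands in for whatever channel reaches a human, and the 0.8 threshold is arbitrary. Expert answers are remembered so the same question is handled autonomously the next time.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical interfaces: a model returns (answer or None, confidence in [0, 1]),
# and an expert channel returns a human-written answer.
ModelFn = Callable[[str], Tuple[Optional[str], float]]
ExpertFn = Callable[[str], str]

class PilotLoop:
    """The AI answers by default and asks a human only when it is not confident."""

    def __init__(self, model: ModelFn, ask_expert: ExpertFn, min_confidence: float = 0.8):
        self.model = model
        self.ask_expert = ask_expert
        self.min_confidence = min_confidence
        self.learned: Dict[str, str] = {}  # answers captured from earlier escalations
        self.escalations = 0

    def decide(self, question: str) -> str:
        if question in self.learned:
            return self.learned[question]  # reuse knowledge a human already supplied
        answer, confidence = self.model(question)
        if answer is not None and confidence >= self.min_confidence:
            return answer  # the AI acts on its own
        # Gap detected: request human input, then remember it for next time.
        self.escalations += 1
        human_answer = self.ask_expert(question)
        self.learned[question] = human_answer
        return human_answer

if __name__ == "__main__":
    def toy_model(q: str) -> Tuple[Optional[str], float]:
        # Stand-in model: confident only about password questions.
        return ("Use the reset link on the login page.", 0.95) if "password" in q else (None, 0.0)

    loop = PilotLoop(toy_model, ask_expert=lambda q: f"[expert answer to: {q}]")
    loop.decide("How do I reset my password?")
    loop.decide("Can I get a refund after 60 days?")
    loop.decide("Can I get a refund after 60 days?")
    print(loop.escalations)  # 1: the repeated question is answered from memory
```

Exact-match caching is the simplest possible way to feed human answers back into the loop; a production system would more likely route them into retrieval or into the next fine-tuning round.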

A Real-World Example: Customer Service Chatbots

Let’s consider a simple but real-world scenario: a customer service chatbot. Imagine this bot has access to all relevant information—customer databases, Jira tickets, FAQs, and documented workflows. For simplicity, assume it achieves perfect accuracy in intent detection.

What failure modes remain?

The data is incomplete (e.g., it doesn’t know the answer).

The data is wrong (e.g., outdated FAQs or incorrect workflows).

In these scenarios, the fallback is often to engage a human. This is illustrative because it highlights what the AI system should ideally do: engage humans strategically when gaps arise.
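The two failure modes can be told apart mechanically, at least roughly. The sketch below keeps the scenario’s assumption of perfect intent detection, so each query maps to a known topic. A topic with no documents is treated as incomplete data, and a topic whose newest document has not been reviewed within a hypothetical 180-day window is flagged as possibly outdated. Staleness is only a proxy for wrongness, so a flag here means "engage a human", not "discard".

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum
from typing import List

class Failure(Enum):
    NONE = "none"
    INCOMPLETE = "incomplete"          # nothing in the knowledge base covers the topic
    POSSIBLY_WRONG = "possibly_wrong"  # coverage exists but has not been reviewed recently

@dataclass
class Doc:
    topic: str
    answer: str
    last_reviewed: date

def classify(topic: str, docs: List[Doc], today: date,
             max_age: timedelta = timedelta(days=180)) -> Failure:
    """Flag a detected intent as answerable, missing, or backed only by stale content."""
    matches = [d for d in docs if d.topic == topic]
    if not matches:
        return Failure.INCOMPLETE
    newest = max(matches, key=lambda d: d.last_reviewed)
    if today - newest.last_reviewed > max_age:
        return Failure.POSSIBLY_WRONG
    return Failure.NONE
```

Either non-`NONE` result is a point where the bot would hand off to a person rather than answer from its own data.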

Addressing Failure Scenarios

Two critical questions emerge:

What should happen after a failure is detected and a human is engaged?

How could the failure have been avoided in the first place?

For the first question, the optimal response is to escalate the issue to a knowledgeable human (or several humans for high-stakes decisions), with the AI system handling data lookup and verification. These experts are usually not the first-line responders; they must have the expertise to guide the system to the best resolution. Think of this as an on-call rotation, or "pager duty", for data management.
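A "pager duty" for data management could route each escalation to on-call domain experts while skipping first-line responders. In this sketch the roster, the load counter, and the rule of two experts for high-stakes cases are all hypothetical choices; the point is only to show the routing logic the paragraph describes.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class Expert:
    name: str
    domains: List[str]
    first_line: bool = False  # first-line responders are excluded from escalations

@dataclass
class Escalation:
    question: str
    domain: str
    high_stakes: bool
    assigned: List[str] = field(default_factory=list)

def route(esc: Escalation, roster: List[Expert], load: Dict[str, int]) -> Escalation:
    """Assign the least-loaded domain experts: one normally, two for high-stakes cases."""
    candidates = [e for e in roster if esc.domain in e.domains and not e.first_line]
    candidates.sort(key=lambda e: load.get(e.name, 0))  # stable: ties keep roster order
    needed = 2 if esc.high_stakes else 1
    if len(candidates) < needed:
        raise LookupError(f"not enough on-call experts for domain {esc.domain!r}")
    esc.assigned = [e.name for e in candidates[:needed]]
    for name in esc.assigned:
        load[name] = load.get(name, 0) + 1  # track open escalations per expert
    return esc
```

Raising an error when no qualified expert is on call makes the coverage gap itself visible, which is arguably as important as the unanswered question.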

For the second question, prevention lies in robust simulations. For example, problem queries could be simulated using past customer interactions, exploring various branches of inquiry to proactively identify gaps in data or workflows.
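The simulation idea can be sketched as a replay: feed past queries, plus a few follow-up variations of each, into the bot and record which ones it cannot answer. The `answer` function and the follow-up phrases are hypothetical stand-ins, and the branching here is only one level deep; a fuller simulation might chain follow-ups or generate them with a model.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical interface: returns an answer, or None when the bot cannot answer.
Answerer = Callable[[str], Optional[str]]

def branches(query: str, follow_ups: List[str]) -> List[str]:
    """One level of inquiry branches: the query itself plus each follow-up variation."""
    return [query] + [f"{query} {f}" for f in follow_ups]

def find_gaps(past_queries: List[str], follow_ups: List[str],
              answer: Answerer) -> Dict[str, List[str]]:
    """Replay past queries and their branches; collect the ones left unanswered."""
    gaps: Dict[str, List[str]] = {}
    for query in past_queries:
        unanswered = [b for b in branches(query, follow_ups) if answer(b) is None]
        if unanswered:
            gaps[query] = unanswered
    return gaps

if __name__ == "__main__":
    kb = {"refund": "toy refund answer", "shipping": "toy shipping answer"}

    def toy_answer(q: str) -> Optional[str]:
        # Stand-in bot: keyword lookup that knows nothing about international orders.
        if "international" in q:
            return None
        return next((v for k, v in kb.items() if k in q), None)

    for query, missing in find_gaps(["refund status", "warranty claim"],
                                    ["for international orders"], toy_answer).items():
        print(query, "->", missing)
```

Each entry in the result is a candidate gap to hand to a domain expert before a real customer runs into it.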

This approach scales beyond customer service to more complex systems, such as financial modeling, supply chain management, and strategic decision-making.

Scaling the Solution

Inverting traditional workflows, placing AI in the pilot’s seat and using humans as expert data sources, can fundamentally reshape enterprise operations. When properly implemented, this approach not only taps into the best of human expertise on demand but also helps AI systems maintain efficiency, consistency, and domain relevance over time. To realize these benefits, however, organizations must couple this human-in-the-loop paradigm with robust data-centric strategies, particularly when it comes to fine-tuning AI models. A carefully curated and differentiated dataset keeps the AI’s internal parameters aligned with business goals and processes and grounded in the most reliable, high-quality information.

For this approach to succeed, enterprises should:

Automatically Query Human Input:

Treat humans as dynamic, on-call domain experts who provide clarifications, judgments, and domain-specific insights exactly when the situation demands. This continuous, as-needed engagement ensures that fine-tuned models incorporate high-value human knowledge without depending too heavily on subjective inputs.

Simulate Task Inputs:

Run simulations continuously, for example by replaying past interactions, so that gaps in data quality and selection are found and fixed before real inputs expose them. This keeps the AI system prepared to handle new inputs effectively.

Continuously Update Data and Models:

Implement iterative pipelines that not only refine data selection, weighting, and filtering, but also continuously adjust the model’s fine-tuning as it encounters new scenarios.

Enterprise AI systems can’t succeed if they’re treated as mere co-pilots. The future of AI in the enterprise requires rethinking workflows, prioritizing AI as the primary decision-maker, and leveraging humans as on-demand knowledge sources—brought into the loop only when their judgment or domain expertise is truly needed. This shift in perspective not only demands robust strategies for filtering, scoring, and fine-tuning proprietary data, but also calls for continuous refinement of how organizations manage, interpret, and integrate information into AI models.

By giving the AI system the reins, enterprises can better address the persistent issues of “dirty” data, the hidden complexities of human judgment, and the inherent challenges of operating at scale. The goal is not to diminish human input, but to transform it into a strategic resource that the AI can query as needed. This inversion—where humans assist AI, rather than the other way around—represents the logical next step in unlocking the full potential of AI within the future enterprise landscape.

Ruslan,

This is an excellent and well-structured analysis. As someone who deals with these specific challenges daily, I can fully appreciate the nuanced understanding you've brought to this discussion.

The symbiosis between humans and a highly optimized AI co-pilot is indeed critical. While the vision of AI as the primary decision-maker is compelling, I believe the ideal balance lies in dynamic collaboration. A well-optimized co-pilot not only enhances efficiency but also ensures that the system benefits from the contextual judgment and strategic insight that humans uniquely provide.

Your emphasis on robust data differentiation strategies resonates strongly. The challenge isn't just cleaning the data but ensuring it is strategically curated to align with organizational goals. Additionally, I agree that AI systems must be designed to engage humans strategically—leveraging their expertise only when gaps arise.

Thank you for sharing this insightful perspective!

The challenge of knowledge gaps is real. Getting intrinsic knowledge out of human heads and training AI systems on it is a huge obstacle: we are talking about the knowledge of accountants, customer service managers, hiring managers, stock brokers, doctors, and so on.

"Rather than AI serving as a co-pilot to human decision-makers, it should take the role of the pilot." I completely agree, but for humans to cooperate, it has to be presented more tactfully. A human-in-the-loop approach ensures that AI keeps learning from its mistakes, while humans retain the full ability to give feedback on every AI output and to update the policy. Humans approve high-risk decisions, and they keep the AI on track by managing the business rules and policy and by retraining AI systems based on expert feedback.

I am currently finding that people hesitate to collaborate with AI engineering teams for fear of AI replacing their jobs or disrupting business processes. People sometimes give negative feedback but resist explaining how to improve the AI’s outputs; "I don't like the answer" is not very helpful. When you redefine human jobs as managers of AI agents, with each person supported by several AI assistants, people are more likely to collaborate with AI engineers on improving AI systems. When they see their jobs evolving into more interesting roles with higher business impact, they begin to see a brighter future for themselves as managers of an agentic AI workforce. If they see themselves as temporary gap-fixers and knowledge sources, they are less likely to share that knowledge sincerely.